Post: SMBC Encoding Issues

1. Introduction

1.1. Background

I like Saturday Morning Breakfast Cereal. I also tend to forget a lot of things. This interferes with my ability to take unprompted action, especially if nothing would later remind me of the thing I forgot to do.

Therefore I use an RSS feed reader to subscribe to things I like, so that I don’t have to keep re-discovering likeable things anymore (I have re-discovered SMBC several times over the past half decade, reading the comics for several weeks before forgetting its existence again for months or even years).

Recently Zach of SMBC has started adding blog posts below the comic, which I was happy to discover were also included in the RSS feed. But I wouldn’t be writing this post if it weren’t for the-thing-that-we-hoped-would-have-ended-ages-ago-because-of-UTF-8: encoding issues! (And possibly some other things.)

1.2. Disclaimer

I’m not an expert on anything you’ll encounter in this post. I’ll very probably make glaring mistakes, if I even get anything right at all. This is just a semi-live report of my dive into what caused the things I noticed.

1.3. The issues

There are three things that seem to go wrong with the feed:

- Most single and double quotes (also when used as apostrophe) are shown as “â”.

- Many newlines are stripped from the text, turning much of it into one large paragraph.

- The feed seems to dislike the word/phrase “body”.

This third one is very strange, but also the one I have the least to say or theorize about. The first and second you may have already encountered yourself in other places, even if not in this form (accented letters like “ë” turning into the multi-symbol sequence “Ã«” or things like that).

On this page I’ll be talking about a specific post which exhibits all three issues; I’ve spotted the first issue and I think the second one as well in several other posts, but this specific post is a good example. Since the RSS version has already moved off the feed (only the ten most recent posts are included) I’ll host a part of it here so that you can look at it yourself (most of the text has been removed because I’m not in the business of copying and rehosting other people’s works). If the RSS item just looks like a web page, right-clicking and selecting “View Page Source” or something like that should show you what I’ll be talking about.

2. The first issue: Say “âââ”

For the first issue I had to take a look at the raw form of the RSS feed (the equivalent of “View Page Source” when looking at a web page), because the feed reader might be at fault here (though the stray intact quote here and there made that quite improbable). Indeed, some information was lost in the interpretation of the feed by the feed reader: where in the feed reader a phrase was shown as “it wouldâve been”, the feed itself (when looked at in the browser, which shows the raw XML) looks like “it would&#226;&#128;&#153;ve been”.

The second phrase contains several HTML escape sequences where I would expect only one. From the context of the post, it is clear that the “â” should be an apostrophe, and instead of the expected escape sequence “&#39;” (for an apostrophe) we find three escape sequences:

- “&#226;”: This is the only printable character, the “a with a circumflex accent” or “â”;

- “&#128;”: This is a non-printable character, Unicode codepoint 128;

- “&#153;”: This is also a non-printable character, Unicode codepoint 153.

It’s remarkable that we see three characters here, even though only one can be printed. This immediately raises the suspicion of encoding issues involving, at one end or the other, UTF-8. This is the only character encoding I know of that may encode a single character as 3 bytes, and if more encodings exist that may do this it is certainly the most used one.
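To make the "up to several bytes per character" property concrete, here is a minimal Python sketch. The helper name `utf8_length` and the sample characters are only chosen for illustration; they do not come from the feed.

```python
def utf8_length(character: str) -> int:
    """Return the number of bytes UTF-8 needs for a single character."""
    return len(character.encode("utf-8"))


# Illustrative samples: plain ASCII, an accented letter, a currency sign, an emoji.
for character in ["a", "ë", "€", "🎵"]:
    print(f"U+{ord(character):04X} takes {utf8_length(character)} byte(s) in UTF-8")
```

Characters in the ASCII range take one byte, while everything else takes two, three or four, which is why a single misread character can fall apart into several.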

2.1. ASCII, UTF-8 and text encoding in general

I’ll stick to the characteristics of text encoding schemes that are relevant for telling you about my observations and skip explaining the entirety of Unicode and UTF-8; if you are interested, these videos provide a nice introduction: Numberphile with Tom Scott explaining the history of text encoding and how UTF-8 works; and EmNudge visually explaining Unicode and several of its different encoding schemes (UTF-32, UCS-2, UTF-16 and UTF-8). If you know about the concept of Unicode just being a list of symbols and their identifying numbers (called “codepoints”), then this part of the Wikipedia page on UTF-8 could just be enough.

Also, if you just want a list of Unicode codepoints (I’m not sure if it’s complete, but it contains everything I need) there’s this file.

2.2. Translation from HTML escape sequences to bytes

HTML escape sequences represent characters that are not in the printable section of ASCII (for instance, accented letters: “&#226;” for “â”) or that have a special meaning in HTML (for instance, “&gt;” for “>”). This preserves them when transmitting HTML through connections that can only handle ASCII and allows you to use special HTML characters in text without accidentally writing (possibly invalid) HTML.

If we assume that the sequence of three escape sequences mentioned above represents a series of UTF-8 bytes interpreted as some sort of extended ASCII (be it either Windows-1252, ISO 8859-1, ISO 8859-15 or several others like these), we can trace them back to their original bytes. In this case I’ll assume the bytes were interpreted as one of the three I’ve mentioned; it doesn’t matter which, because “â” is assigned the same codepoint in each: number 226. Since I’ll be talking about numbers in several bases, I’ll start appending their base (in decimal!) as a subscript; this way I hope I can keep things clear.

So, “â” is assigned codepoint 226₁₀ in these extended ASCII encodings. In binary, that’s 1110 0010₂ (split in groups of four digits for readability). A UTF-8 decoder would see that as the first byte of three, which together encode a single Unicode codepoint. The second and third bytes are simple; the HTML escape sequences directly point at their numeric values: 128₁₀ (= 1000 0000₂) and 153₁₀ (= 1001 1001₂).
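The step from escape sequences to bytes can be sketched in a few lines of Python. The function name `references_to_bytes` is made up for this illustration, and the sketch assumes that each numeric reference stands for exactly one byte (so every number must be below 256).

```python
import re


def references_to_bytes(text: str) -> bytes:
    """Turn a run of decimal numeric character references into raw bytes.

    Assumption: each reference stands for one byte; bytes() raises a
    ValueError if a number does not fit in a byte.
    """
    numbers = [int(digits) for digits in re.findall(r"&#(\d+);", text)]
    return bytes(numbers)


raw = references_to_bytes("&#226;&#128;&#153;")
for value in raw:
    # Show each byte in decimal and as eight binary digits.
    print(f"{value:3d} = {value:08b}")
```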

2.3. Then, from bytes to a Unicode codepoint

This gives us the three bytes that together should encode a single Unicode codepoint:

| 1110 0010 | 1000 0000 | 1001 1001 |

If we take a look at the rules in the Wikipedia article I linked earlier, we see that the parts of these bytes that together form the number of the codepoint are the parts between square brackets here:

| 1110 [0010] | 10[00 0000] | 10[01 1001] |

Put one after the other (and skipping the zeroes at the beginning), we get the binary number 10 0000 0001 1001₂ = 8217₁₀. Since Unicode codepoints are more usually known by their hexadecimal value, we’ll translate it to that base: 2019₁₆.
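The same bit-picking can be written out as a short Python sketch. The function name `decode_three_byte_utf8` is hypothetical, and the sketch only handles the three-byte case discussed here; it does not perform the extra validity checks (overlong forms, surrogates) that a real decoder would.

```python
def decode_three_byte_utf8(first: int, second: int, third: int) -> int:
    """Combine the payload bits of a three-byte UTF-8 sequence into a codepoint."""
    if first >> 4 != 0b1110:
        raise ValueError("first byte does not start with 1110")
    if second >> 6 != 0b10 or third >> 6 != 0b10:
        raise ValueError("continuation bytes must start with 10")
    # Keep 4 payload bits of the first byte and 6 of each continuation byte.
    return ((first & 0b00001111) << 12) | ((second & 0b00111111) << 6) | (third & 0b00111111)


codepoint = decode_three_byte_utf8(0b11100010, 0b10000000, 0b10011001)
print(codepoint, f"U+{codepoint:04X}")
```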

From the hypothesis that the RSS feed contains mistakes caused by the assumption that the input text was extended ASCII instead of the actual UTF-8, we get the question: does this codepoint make sense here? If it doesn’t, we’ve made a wrong assumption along the way; if it does, we’re probably on the right track.

2.4. Conclusion on the first issue

We’re lucky: Unicode codepoint 2019₁₆ is called “RIGHT SINGLE QUOTATION MARK” and it looks like this (between guillemets so it doesn’t disguise itself as part of the quotes): « ’ ». It’s definitely a sensible character in the context; if we replace the nonsense in the RSS feed with it, the phrase looks like this: “it would’ve been”. Looks quite alright to me. I think we’re safe to assume that we have discovered which chain of steps led to the first issue in the feed:

- Zach wrote an essay in software that uses UTF-8;

- The feed producer, assuming the text is written in extended ASCII, produced HTML littered with “&#226;&#128;&#153;”;

- My feed reader shows me a text where every instance of an apostrophe looks like “â”.

This concludes the dive into the first issue I encountered with the feed; we can state with some confidence that it was caused by software assuming its input was extended ASCII while it was actually UTF-8.
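The whole chain can be imitated, and reversed, with a small Python sketch. This is only a model of what might have happened, not the feed producer's actual code: the names `garble` and `repair` are invented, and ISO 8859-1 is picked as the "extended ASCII" because it maps bytes 128 and 153 straight to codepoints 128 and 153, which matches the references seen in the feed (Windows-1252 would map those two bytes to other codepoints).

```python
import re


def garble(text: str) -> str:
    """Hypothetical model of the feed producer: misread UTF-8 as ISO 8859-1."""
    misread = text.encode("utf-8").decode("iso-8859-1")
    # Write every non-ASCII character as a decimal numeric character reference.
    return "".join(c if ord(c) < 128 else f"&#{ord(c)};" for c in misread)


def repair(escaped: str) -> str:
    """Undo garble(): references -> characters -> bytes -> UTF-8 text."""
    # html.unescape is avoided on purpose: it follows the HTML5 rules, which
    # remap references such as &#128; instead of yielding codepoint 128.
    characters = re.sub(r"&#(\d+);", lambda m: chr(int(m.group(1))), escaped)
    return characters.encode("iso-8859-1").decode("utf-8")


original = "it would\u2019ve been"
broken = garble(original)
print(broken)
print(repair(broken) == original)
```

The repair step only works on text that was damaged in exactly this way; running it on healthy text containing characters outside ISO 8859-1 would raise an error.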

3. The second issue: Broken linebreaks

The second issue is one of line-endings or, more specifically, the lack thereof. The SMBC post in question does not seem to contain any linebreaks once we get to the essay that forms the main content; at least, when I look at it in my feed reader. On the website, however, it seems to contain plenty of linebreaks (making it much more readable), and even when viewing the feed itself in a text editor the linebreaks are present. This calls for a deeper dive; like with text encoding, there’s lots of weirdness in linebreak-land (see this section of the Wikipedia page on newlines for examples).

3.1. 🎵 Newlines are made of this 🎵

Direct inspection of these newlines (for instance, to see whether they consist of just a linefeed, a carriage return and a linefeed, or perhaps (but very unlikely) just a carriage return) is not easy because newline characters themselves tend to be quite invisible in displayed text; their presence can only be inferred from the behaviour of other characters. It would be useful to see what the newlines are made of instead of seeing them as newlines.

What this calls for is a hex editor. We’re not taking a turn to dark magic; a hex editor is a program that allows you to view (and edit; hence the second part of the name) the contents of a file as “raw” bytes. Each byte is shown as two hexadecimal digits (hence the first part of the name), which is much more convenient than binary and takes up less space on your screen. To be honest, we don’t really need a hex editor since we won’t be doing any editing; anything that would show me the file as its underlying bytes instead of interpreting these bytes and showing me readable text would do. But a hex editor will probably be more convenient, since full-fledged editors tend to also have several nice features: searching for phrases and showing the bytes alongside a text version of the file will probably come in handy.

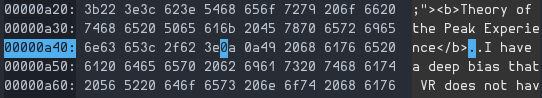

In hexl-mode, a hex editor in Emacs, the first place where a linebreak occurs

in the browser but not in my feed reader looks like this:

The first column lists the offset of each line’s first byte (in hexadecimal) and is not part of the actual file. The next eight columns show the hexadecimal values of sixteen bytes of the file. The last column, which contains readable text, shows the equivalent text rendering of the bytes in the same line.

The highlighted characters in the bytes and text view represent the same part of the file: the highlighted byte “0a” is shown as “.” in the text. But if we take yet another look at ASCII, we see that 0A₁₆ (or 10₁₀) doesn’t encode the full stop. Instead, it encodes the linefeed character. Apparently, in hexl-mode, a full stop in the rightmost column also stands for any character that’s hard to print, of which linefeed is understandably one.

This byte is followed by another “0a”, which makes two linefeeds in a row. This makes sense, because the newline-containing version of the essay puts an empty line between all paragraphs (which means two consecutive newlines).
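For readers without a hex editor at hand, a rough equivalent can be sketched in Python. The functions `hexdump` and `count_newline_styles` and the sample bytes are made up for this illustration; the layout only loosely imitates a hex editor and is not a reproduction of hexl-mode.

```python
def hexdump(data: bytes, width: int = 16) -> str:
    """Render bytes as offset, hex values and a text column ('.' = unprintable)."""
    lines = []
    for offset in range(0, len(data), width):
        chunk = data[offset:offset + width]
        hex_part = " ".join(f"{byte:02x}" for byte in chunk)
        text_part = "".join(chr(byte) if 32 <= byte < 127 else "." for byte in chunk)
        lines.append(f"{offset:08x}: {hex_part.ljust(width * 3 - 1)} {text_part}")
    return "\n".join(lines)


def count_newline_styles(data: bytes) -> dict:
    """Count CRLF pairs, lone linefeeds and lone carriage returns."""
    crlf = data.count(b"\r\n")
    return {
        "CRLF": crlf,
        "LF": data.count(b"\n") - crlf,
        "CR": data.count(b"\r") - crlf,
    }


# Invented sample: two paragraphs separated by an empty line.
sample = b"end of one paragraph.\n\nStart of the next."
print(hexdump(sample))
print(count_newline_styles(sample))
```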

So, the essay contains linefeeds. Then why aren’t they shown by the feed reader? The answer probably lies in the newlines that are shown: they look like “</p><p>”. That’s just plain HTML for “end paragraph, begin paragraph”. This seems to be the thing: the post is HTML, not plain text. The parts of the text that show as paragraphs in the feed reader are actually HTML paragraph elements. This includes the essay, which is one large paragraph element.

The question this makes us ask ourselves is: if the essay is one, undivided paragraph, should a browser show the linefeeds contained in it as newlines or should it just ignore them? Which is correct according to the standards and/or practices of modern web development?

3.2. Why web development haunts my nightmares (a small deviation)

My first guess was that the browser simply guesses that it should show the newlines. The modern internet relies on more guessing than I’m comfortable with:

- What to do with invalid HTML because HTML standards before HTML 5 did not specify what to do when a page consists of invalid HTML;

- Whether to render web pages incorrectly because they were written to look good in old browsers which did not render web pages correctly;

- In which way to render web pages incorrectly because of course there are multiple ways to do this;

- Which encoding to assume for a web page because many older web pages do not specify which encoding they use.

As far as I know, all this exists because of tensions between the requirements of the different groups of people who together made the World Wide Web what it is today:

- Browser makers wanted to provide the best features to their users.

  - Competition was fierce between early browsers (and continued to be so for a very long time), and one way to make users move from a competitor’s browser to yours was to provide a feature that the other browser did not (yet) support.

- Web page writers did not always support all browsers.

  - It’s already hard enough to write a web page that functions in one browser, let alone every browser. Combined with the rise and fall of countless competing browsers, it was (and still is) tempting to choose just one and make sure the site looked alright in that particular browser.

- The W3C was more concerned with descriptive rather than prescriptive standards.

  - Development of web technology started off at high speed, and the W3C has always seemed to sort out the mess of different proprietary additions afterwards instead of trying to get the browser people to collaborate on a standard before implementing the next additional feature. I think this has always been the most realistic approach, but it would have been nice if more of the basics of the web had been planned and standardised from the start instead of going through the phases of

    - one browser implementing a new feature,

    - the competitors implementing equivalent but slightly different features,

    - and the standards people having to work out how to support all varying implementations while keeping the standard as clean as possible.

The result of all of this is that it can be quite complex to find out why and how something web-related does the things it does. Therefore it seemed likely to me that heuristics concerning newlines could be part of the history of the web, with the result that interpretations of which newlines to show and which to ignore could differ.

Fortunately, it turned out I was wrong. Also, with the coming of HTML 5 and related things, the web is tidying up nicely standards-wise. This won’t change any existing websites and browser quirks by itself, of course, but it’s still an improvement.

3.3. Not the web’s fault (this time)

While I was looking up how browsers handle newlines and such in HTML, Mozilla’s web docs site was kind enough to tell me that there are standardised ways of telling the browser how to handle newlines and all sorts of whitespace within an HTML element. This is done through a CSS property of the element, aptly called white-space. After finding this out, it would be unwise to not at least check whether the RSS feed item employs this property.

And there it is, hiding in plain sight: “<span style="font-size: 14.6667px; white-space: pre-wrap;"> […] </span>” around the whole essay. Setting white-space to “pre-wrap” tells the browser to respect all newlines in the text, and on top of that it is allowed to wrap the text where necessary (meaning the browser will insert additional newlines where necessary to make sure the text doesn’t overflow from the element).
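Checking a feed item for this property does not have to be done by eye. The following Python sketch uses the standard library's `html.parser` to list elements whose inline style sets white-space to a value that keeps newlines. The class and function names are invented for this illustration, it only looks at inline `style` attributes (not at stylesheets), and the fragment at the bottom is a shortened stand-in for the real feed item.

```python
from html.parser import HTMLParser

# white-space values under which newlines in the source text are kept.
NEWLINE_PRESERVING = {"pre", "pre-wrap", "pre-line", "break-spaces"}


class WhiteSpaceFinder(HTMLParser):
    """Collect (tag, value) pairs for inline white-space declarations."""

    def __init__(self):
        super().__init__()
        self.found = []

    def handle_starttag(self, tag, attrs):
        style = dict(attrs).get("style") or ""
        for declaration in style.split(";"):
            name, _, value = declaration.partition(":")
            if name.strip().lower() == "white-space":
                self.found.append((tag, value.strip().lower()))


def newline_preserving_elements(html_text: str) -> list:
    """Return the elements whose inline style asks for newlines to be kept."""
    finder = WhiteSpaceFinder()
    finder.feed(html_text)
    return [(tag, value) for tag, value in finder.found if value in NEWLINE_PRESERVING]


fragment = '<p><span style="font-size: 14.6667px; white-space: pre-wrap;">one\n\ntwo</span></p>'
print(newline_preserving_elements(fragment))
```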

This means that the browser, when showing all linefeeds as newlines, does exactly what it’s asked to do, and it’s the feed reader that’s wrong! Apparently, the feed reader’s internal browser does not take this CSS property into consideration when deciding how to show the text.

This solves the second mystery that caused the SMBC post to be hard to read in my feed reader.

4. The third issue: “Nobody”, but with no “body”

This may be the hardest to solve, and I fear that it may not even be something we can solve from the comfort of our armchairs. The issue is that in the feed version of the essay, there are several places where we read “no” where it’s quite clear that it should say “no-one” or “nobody”:

- “No in our imaginary pub sings”

- “mostly because no really knew”

- “no in my early life wrote”

When we go look at the post on the SMBC web page, we find that here each of those “no” is a “nobody”. It is indeed the case that each instance of “nobody” in the essay (and perhaps in the whole post; “nobody” doesn’t occur in the post before the essay, so we don’t really know) is truncated to “no” in the feed.

I haven’t the slightest idea what could cause this. The first thing I thought of was something very naïvely removing parts of words that could be HTML tags, but this hypothesis falls apart once you notice that the word “a” (another HTML tag name) is still present in the feed.

Then perhaps it’s just removing parts of words that could be specific HTML tags; for instance, only tags that shouldn’t occur in the feed item. But then, why search-and-remove the names of these tags instead of the tags themselves? It’s not hard to look for “<body” instead of “body”, just to make it a little bit less likely that you’ll unnecessarily remove actual text instead of HTML tags.

What if something went wrong with the search-and-remove? Maybe the “<” of “<body” for which it looked was removed by some superfluous escaping or such? Or what if Zach had a search-and-replace oops?
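To show how little it would take for such a mistake to produce exactly these symptoms, here is a purely hypothetical Python sketch. Nothing suggests the feed producer actually works like this; both function names are invented, and the second function is only there to contrast a more careful approach with the naive one.

```python
import re


def strip_tag_names_naively(text: str, tag_names=("body",)) -> str:
    """Hypothetical bug: remove the bare tag name wherever it occurs."""
    for name in tag_names:
        text = text.replace(name, "")
    return text


def strip_tags(text: str, tag_names=("body",)) -> str:
    """More careful variant: only remove actual opening and closing tags."""
    names = "|".join(re.escape(name) for name in tag_names)
    return re.sub(rf"</?(?:{names})\b[^>]*>", "", text, flags=re.IGNORECASE)


sentence = "mostly because nobody really knew"
print(strip_tag_names_naively(sentence))
print(strip_tags(f"<body>{sentence}</body>"))
```

The naive version turns “nobody” into “no” while leaving the rest of the sentence alone, which is the pattern seen in the feed; whether anything like it is really the cause remains a guess.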

At this point, I’m just stacking hypotheses on top of each other for no other reason than that I don’t like knowing nothing about the cause at all. Fact is, there seem to be no leads to follow. I’ll have to accept, at least for the moment, that there’s no solution to this problem.

(Unless you know something. If that is the case, please send me an e-mail or mention me in a toot or something like that; I’m dying to know what could cause the phrase “body” to disappear from a text without any other apparent effect).

5. Epilogue

These were the dives into the issues I spotted with the RSS feed of Saturday Morning Breakfast Cereal. I’m curious what you think of them. Did you learn (about) something you didn’t know? Did I make a mistake (or several) and can you point them out? Even if you don’t have much to say, I appreciate any feedback or remark you might have. I’ll add a contact page soon, where I’ll point out how to contact me by e-mail or on Mastodon (I haven’t yet got federation working well with Matrix so that’ll have to wait).